The Hidden Risks of AI-Generated Code

AI is the new teammate who never sleeps. It drafts functions, fills in tests, and suggests whole files in a blink. Magic—until it isn’t. When a release goes sideways, it’s not enough to know what changed; you need to know who or what authored it and whether it passed the rules you trust.

Think of provenance like a seatbelt: most of the time you don’t notice it; the one time you need it, you’re very glad it’s there.

Below are the quiet ways AI-generated code can hurt your team—and the habits that keep you safe, fast, and audit-ready.

1) Security Blind Spots

AI can produce code that looks idiomatic but smuggles in risky patterns: subtle input-parsing issues, missing validation or authorization checks, shaky crypto defaults. Static analysis and tests catch some of it, but the riskiest bugs hide in “works on my machine” paths.

Visibility is security. If you can see where AI wrote code, you can route those lines to deeper review, add tests where it matters, and gate merges when confidence is low.

2) Unknown Provenance = Policy & Trust Risk

Copyright gets most of the attention, but the everyday issue is simpler:

- Unknown origin breaks policy. Many teams have rules: “AI contributions must be reviewed,” “certain files can’t include AI-generated code,” or “critical paths require extra sign-off.” If you can’t prove origin, you can’t enforce policy.

- Customers want proof, not vibes. Vendor questionnaires and audits ask how you control AI use in your SDLC. “We think we’re careful” doesn’t pass. Evidence does.

No provenance, no pass. Treat authorship like any other control: verify it in PR, store it as evidence, and block merges that fail the bar.
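One way to make “store it as evidence” concrete is to write a small, timestamped record for every check run that your audit tooling can keep. The sketch below is illustrative only: the record schema, the `evidence_record` helper, and the idea of hashing the findings are assumptions, not a standard, and the PR number and commit SHA are placeholders.

```python
import hashlib
import json
from datetime import datetime, timezone


def evidence_record(pr_number: int, commit_sha: str, findings: list, outcome: str) -> dict:
    """Build an audit record for one provenance check run (hypothetical schema)."""
    # Canonical JSON (sorted keys, compact separators) so findings with the same
    # content and order always produce the same digest.
    canonical = json.dumps(findings, sort_keys=True, separators=(",", ":"))
    return {
        "pr": pr_number,
        "commit": commit_sha,
        "checked_at": datetime.now(timezone.utc).isoformat(),
        "outcome": outcome,
        "finding_count": len(findings),
        "findings_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
    }


record = evidence_record(
    pr_number=123,          # placeholder PR number
    commit_sha="0123abc",   # placeholder commit SHA
    findings=[{"path": "src/auth.py", "line": 12, "score": 0.95}],
    outcome="failure",
)
print(json.dumps(record, indent=2))
```

Storing the digest alongside the full findings file lets a reviewer later confirm that the stored findings are the ones this record describes.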

3) Quality Drift & Maintainability Debt

AI is great at plausible code. It’s less great at your code—your style, patterns, naming, and architecture. Over time, tiny deviations become a maintenance tax: harder diffs, brittle modules, mysterious helpers.

Confidence is a feature. Tag AI-authored lines, keep standards consistent, and route changes to the right reviewers before small inconsistencies turn into weekend refactors.

4) Missing Context & Business Logic

Models don’t know your domain invariants, unwritten contracts, or that one migration from 2019 everyone is afraid to touch. You’ll get code that compiles, passes a happy-path test, and quietly violates a business rule two hops away.

Truth in every commit. Make it obvious when code was AI-authored so reviewers read with the right level of suspicion and add the missing guardrails.

5) Overreliance & Skill Atrophy

Autocomplete is a gift; it can also be a crutch. If the team stops asking “why,” you slowly trade judgment for speed. That’s fine—until something breaks and you need the judgment back.

Visibility before velocity. Keep the pace, but make the extra review step explicit when AI is involved. Curiosity stays sharp when it’s part of the workflow.

What “good” looks like (and takes minutes to set up)

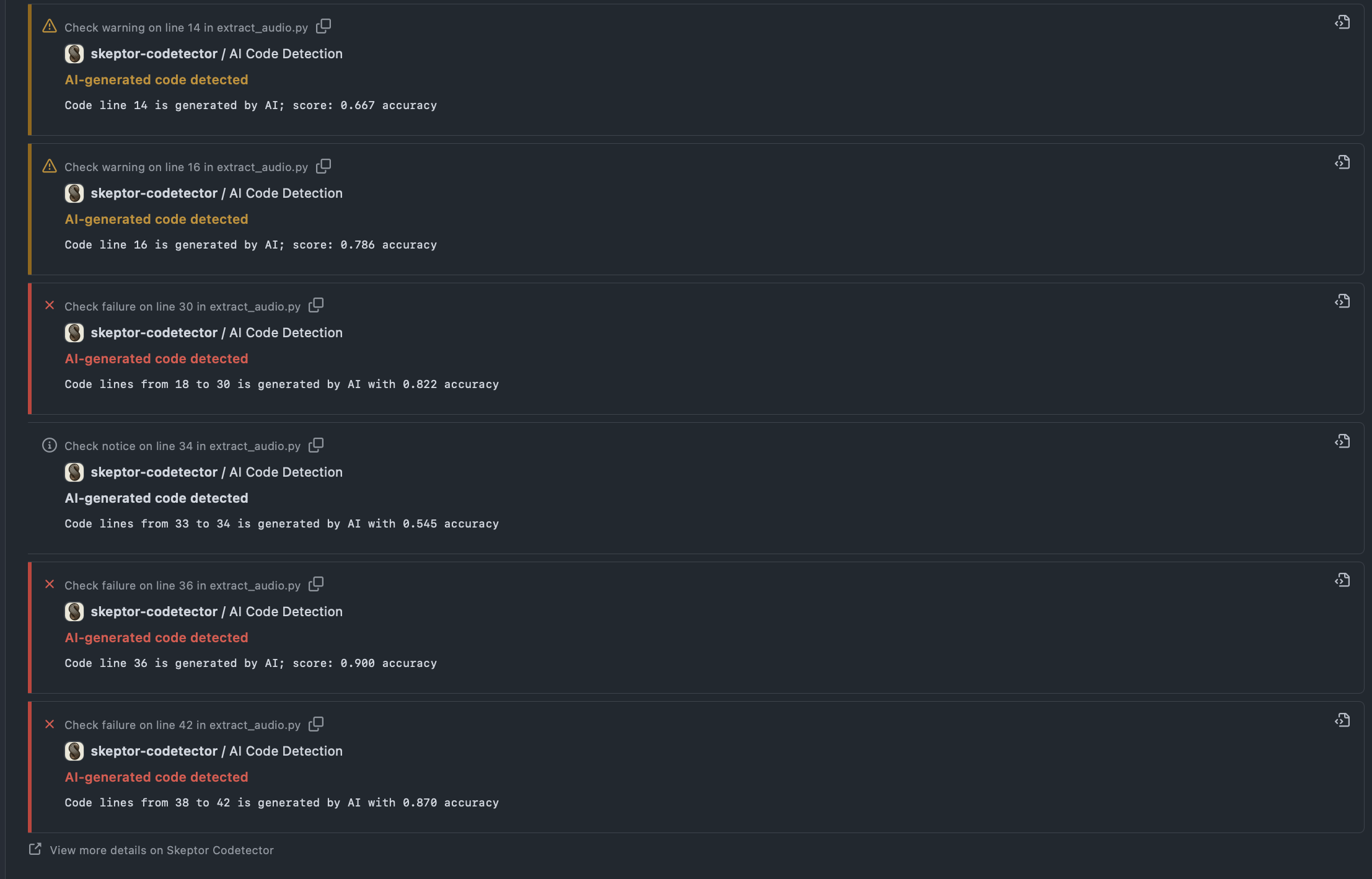

Proof in every PR. Whenever a PR is opened, reopened, or updated with new commits, run an automated check that:

- Detects AI-authored code line by line and posts annotations with confidence scores.

- Applies notice / warning / failure levels based on thresholds you control.

- Routes flagged changes to the right owners or reviewers.

- Leaves an audit trail your security and compliance folks can point to.
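The threshold step can be sketched in a few lines. This assumes a hypothetical detector that emits one confidence score (0.0 to 1.0) per changed line; the `Finding` type and the threshold values are illustrative, not part of any real tool. The output dicts use the field names of GitHub check-run annotations (`path`, `start_line`, `end_line`, `annotation_level`, `message`).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Finding:
    """One line flagged by a (hypothetical) AI-authorship detector."""
    path: str
    line: int
    score: float  # detector confidence that the line is AI-authored, 0.0-1.0


# Hypothetical policy thresholds; tune these to your own risk tolerance.
THRESHOLDS = {"failure": 0.90, "warning": 0.70, "notice": 0.40}


def level_for(score: float) -> Optional[str]:
    """Return the annotation level for a score, or None if below every threshold."""
    for level in ("failure", "warning", "notice"):  # strictest level first
        if score >= THRESHOLDS[level]:
            return level
    return None


def to_annotations(findings: list) -> list:
    """Convert findings into check-run-style annotation dicts, skipping low scores."""
    annotations = []
    for f in findings:
        level = level_for(f.score)
        if level is None:
            continue
        annotations.append({
            "path": f.path,
            "start_line": f.line,
            "end_line": f.line,
            "annotation_level": level,
            "message": f"Likely AI-authored (confidence {f.score:.2f})",
        })
    return annotations


findings = [
    Finding("src/auth.py", 12, 0.95),
    Finding("src/auth.py", 13, 0.55),
    Finding("README.md", 3, 0.10),
]
for a in to_annotations(findings):
    print(a["path"], a["start_line"], a["annotation_level"])
```

Here line 12 becomes a failure, line 13 a notice, and the README line is dropped. Starting with only `notice` and `warning` enabled, then lowering the `failure` threshold path by path, mirrors the “start with warnings” advice.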

Then flip the switch in branch protection: mark the check as required. If there’s no evidence, or a score exceeds your policy threshold, the PR doesn’t merge. Simple.
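A required check is only useful if its outcome is unambiguous. Here is a minimal sketch of that decision, assuming the detector writes a JSON report in a hypothetical shape (`{"lines": [{"path": ..., "line": ..., "score": ...}]}`) and that `max_allowed_score` is your policy limit. A missing or malformed report fails, exactly like a score above policy. The return values match GitHub check-run conclusions (`success`, `failure`).

```python
import json
from typing import Optional


def conclusion(report_json: Optional[str], max_allowed_score: float = 0.90) -> str:
    """Return "success" or "failure" for the required provenance check.

    report_json is the detector's output as a JSON string (hypothetical shape),
    or None if the detector never produced a report.
    """
    if report_json is None:
        return "failure"  # no evidence, no merge
    try:
        report = json.loads(report_json)
        too_high = any(
            float(entry["score"]) > max_allowed_score for entry in report["lines"]
        )
    except (ValueError, KeyError, TypeError):
        # Malformed evidence (bad JSON, missing fields, wrong types) is treated
        # the same as missing evidence.
        return "failure"
    return "failure" if too_high else "success"


print(conclusion(None))
print(conclusion('{"lines": [{"path": "a.py", "line": 1, "score": 0.4}]}'))
print(conclusion('{"lines": [{"path": "a.py", "line": 1, "score": 0.97}]}'))
```

Failing closed on bad input is the design choice that matters: a crashed or skipped detector should never look like a clean pass.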

Autocomplete isn’t accountability. Provenance is.

Practical guardrails you can adopt today

- Define your rules. Where is AI-generated code allowed? Where is it allowed only with review? Where is it blocked?

- Tune thresholds. Start with warnings, learn from the noise, then graduate critical paths to failures.

- Exclude the obvious. Generated folders, vendored deps, and lockfiles rarely need scrutiny—save attention for meaningful code paths.

- Measure. Track how often AI contributes, where it creates risk, and how review changes outcomes.
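Defining rules and excluding the obvious can both live in a small policy table. This sketch uses Python’s standard `fnmatch` module; the patterns, the `allow` / `review` / `block` labels, and the first-match-wins ordering are illustrative assumptions, not a standard format.

```python
from fnmatch import fnmatchcase

# Hypothetical exclusions: generated code, vendored deps, and lockfiles.
EXCLUDE = ["generated/*", "vendor/*", "*.lock", "*package-lock.json"]

# Hypothetical rules, checked top to bottom; the first matching pattern wins.
RULES = [
    ("src/payments/*", "block"),  # AI-generated code not allowed here
    ("src/auth/*", "review"),     # allowed only with extra sign-off
    ("*", "allow"),               # everything else: normal review
]


def policy_for(path: str) -> str:
    """Return "skip", "block", "review", or "allow" for a changed file path."""
    # fnmatchcase is case-sensitive on every OS, and its "*" also matches "/".
    if any(fnmatchcase(path, pattern) for pattern in EXCLUDE):
        return "skip"
    for pattern, action in RULES:
        if fnmatchcase(path, pattern):
            return action
    return "review"  # conservative fallback if the rules ever stop covering a path


for p in ["src/payments/charge.py", "src/auth/login.py", "vendor/lib/x.js",
          "poetry.lock", "docs/guide.md"]:
    print(p, "->", policy_for(p))
```

Keeping the table in the repository means policy changes go through the same PR review as code changes.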

Conclusion

AI is here to stay—and that’s good news. But speed without sight is how surprises ship. Add provenance to your pipeline, make policy enforceable, and give reviewers the context they need.

See the source. Ship with certainty.